Scientists from the Intelligent Sensing Group have investigated whether weeds can be detected with a drone (UAV) and transfer learning applied to video analysis, using hogweed as an example. Unlike ground-based monitoring tools, UAVs do not spread weed seeds. Transfer learning makes it possible to reduce the UAV payload from two cameras (video and multispectral) to one, eliminating the costly multispectral camera without losing weed detection accuracy. The study is published in the IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing.

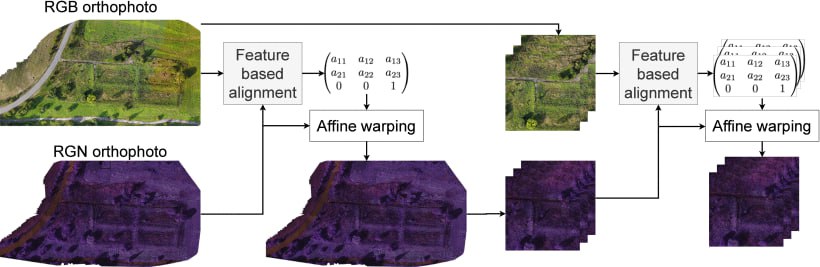

Fig. 1. Greedy pipeline for aligning RGB and RGN orthophotos and creating a dataset for training neural networks. In the first stage, the two orthophotos are aligned with a single affine transform; the alignment is then refined by a second, region-adaptive transform.
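The two-stage alignment in Fig. 1 can be illustrated with a minimal NumPy sketch. It assumes that matched keypoint pairs between the RGN and RGB orthophotos are already available (for example, from a feature matcher) and fits the global affine transform by least squares. The per-tile refinement is a hypothetical stand-in for the region-adaptive stage, since the exact refinement method is not described here; the names `fit_affine`, `apply_affine` and `refine_by_region` are illustrative only.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 2D affine transform mapping src points (N, 2) onto dst points (N, 2).

    Returns a 2x3 matrix A such that dst is approximately src @ A[:, :2].T + A[:, 2].
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # Homogeneous coordinates: each row is [x, y, 1].
    X = np.hstack([src, np.ones((len(src), 1))])
    # Solve X @ M ~ dst for the 3x2 matrix M in the least-squares sense.
    M, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return M.T


def apply_affine(A, pts):
    """Apply a 2x3 affine matrix to an (N, 2) array of points."""
    pts = np.asarray(pts, dtype=float)
    return pts @ A[:, :2].T + A[:, 2]


def refine_by_region(src, dst, A_global, tile_size, min_points=3):
    """Hypothetical region-adaptive stage: after the global warp, fit one residual
    affine per square tile (in warped coordinates) that contains enough matches."""
    dst = np.asarray(dst, dtype=float)
    warped = apply_affine(A_global, src)
    tiles = np.floor(warped / tile_size).astype(int)
    local = {}
    for key in {tuple(int(v) for v in t) for t in tiles}:
        mask = np.all(tiles == key, axis=1)
        # An affine has 6 unknowns, so at least 3 non-collinear matches are needed.
        if mask.sum() >= min_points:
            local[key] = fit_affine(warped[mask], dst[mask])
    return local
```

Each local transform maps globally warped coordinates to their refined positions; tiles with too few matches simply keep the global transform.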

Initial research with a single RGB video camera revealed detection errors: in the visible range, the camera can identify the weed only by the colour and shape of the hogweed leaf. As a result, the trained neural network 'detected' hogweed even on tree branches. To avoid such errors, the researchers decided to add a multispectral camera. However, this increases both the UAV's payload and the cost of the solution.

"We show that combining information from an additional multispectral camera mounted on a drone can improve detection quality, which can then be maintained even with a single RGB camera by introducing an additional convolutional neural network trained using transfer learning to produce multispectral image analogs directly from the RGB stream."

"This approach makes it possible to either abandon the multispectral equipment on the drone or, if only an RGB camera is on hand, improve segmentation performance at the cost of a small increase in computational budget," says one of the authors of the study, senior lecturer Andrei Somov.
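The preprocessing idea can be sketched as a two-stage inference pipeline. This is a structural illustration under stated assumptions, not the authors' architecture: the trained RGB-to-RGN network is replaced by a hypothetical per-pixel linear map (equivalent to a 1x1 convolution), the segmentation model is any callable, and the six-channel input formed by stacking RGB with the emulated RGN channels is an assumed layout. The names `make_pointwise_emulator` and `segment_frame` are illustrative.

```python
import numpy as np
from typing import Callable

Array = np.ndarray


def make_pointwise_emulator(weights: Array, bias: Array) -> Callable[[Array], Array]:
    """Hypothetical stand-in for the RGB-to-RGN network: a per-pixel linear map.

    weights has shape (3, 3) and maps (R, G, B) to (R, G, NIR); bias has shape (3,).
    """
    def emulate(rgb: Array) -> Array:
        # rgb: (H, W, 3) floats in [0, 1]; output is clipped back to [0, 1].
        return np.clip(rgb @ weights.T + bias, 0.0, 1.0)
    return emulate


def segment_frame(rgb: Array, emulate_rgn: Callable[[Array], Array],
                  segment: Callable[[Array], Array]) -> Array:
    """Run one RGB frame through emulation followed by segmentation."""
    rgn = emulate_rgn(rgb)                    # emulated multispectral analog, (H, W, 3)
    x = np.concatenate([rgb, rgn], axis=-1)   # assumed (H, W, 6) segmentation input
    return segment(x)                         # per-pixel label mask, (H, W)


# Usage with placeholder components only (identity emulator, threshold "segmenter").
rng = np.random.default_rng(0)
frame = rng.uniform(size=(4, 5, 3))
emulate = make_pointwise_emulator(np.eye(3), np.zeros(3))
mask = segment_frame(frame, emulate, lambda x: (x[..., 5] > 0.5).astype(np.uint8))
```

In a real system both callables would be trained networks; the placeholders only exercise the data flow and say nothing about how hogweed is actually recognised.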

To support this claim, the Intelligent Sensing Group team conducted an extensive study of network performance in both real-time and offline modes. They achieved at least a 1.1% increase in the mIoU (mean intersection over union) metric when evaluating on the RGB camera stream with emulated multispectral imagery, and a 1.4% increase when evaluating on orthophoto data.
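For reference, mIoU averages over classes the ratio of pixels assigned to a class in both the prediction and the ground truth (intersection) to pixels assigned to it in either (union). The sketch below assumes per-pixel integer labels (for instance, 0 for background and 1 for hogweed) and skips classes absent from both masks; other conventions, such as accumulating counts over an entire dataset before dividing, are also common, and the study's exact evaluation protocol is not stated here.

```python
import numpy as np


def mean_iou(pred, target, num_classes):
    """Mean intersection over union across classes, for integer label masks."""
    pred = np.asarray(pred).ravel()
    target = np.asarray(target).ravel()
    ious = []
    for c in range(num_classes):
        p = pred == c
        t = target == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both masks; skip it rather than count it as 0 or 1
        inter = np.logical_and(p, t).sum()
        ious.append(inter / union)
    return float(np.mean(ious))


# Toy 2x4 masks: class 0 has IoU 4/5, class 1 has IoU 3/4.
target = np.array([[0, 0, 1, 1], [0, 0, 1, 1]])
pred = np.array([[0, 0, 0, 1], [0, 0, 1, 1]])
score = mean_iou(pred, target, num_classes=2)
```

Because mIoU is already a ratio, a reported "1.1% increase" may refer either to percentage points or to a relative change; the sketch only shows how the metric itself is formed.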

"Our results show that, with proper optimisation, adding a preprocessing step to the segmentation network allows the multispectral camera to be eliminated from the flight task entirely, without loss of quality," added PhD student Yaroslav Koshelev.